
Getting Real: Personalised medicine, how personal is too personal?

The themes of Keith Brooke’s story “Life’s Lottery” are many and varied, and many of its technologies are only subtly presented or implied. This makes it quite challenging for me to write about, as I often think the best technical solutions are the ones you barely notice. Think of the IT department in an organization: if you don’t notice them, they are generally doing a phenomenal job. That being said, the story describes a state-wide health surveillance system that is both incredibly personal and riddled with inherent biases and inequalities.

The overall concept in Life’s Lottery is not unlike the credit score system in the UK, and is even closer to the social credit system in China, which some see as sinister and ethically problematic. For those of you who’re not familiar with the technology being developed, this video from Vice Media demonstrates how the surveillance system is being implemented and some of the concerns it raises; the system is, seemingly unironically, named “Skynet”. Although it is mostly concerned with security and detecting illegal activity, it is also designed to publicly shame offenders for acts which in the UK barely seem criminal, for example “jaywalking”.

In the story, any behaviour the state perceives as irresponsible affects Janie’s score and ranking on her Trace Bracelet. We don’t know exactly what Janie’s condition is, but we know she’s waiting for an operation. If the Chinese system above were linked to healthcare (which it may yet be, as it is tied to state ID cards), the jaywalker could face significant healthcare consequences for a hasty decision to cross a road, and in Janie’s situation, that could mean an operation delayed even further.

The relationship between Life’s Lottery and the Chinese system is also useful to consider from a social mobility perspective. Keith’s story has an undertone of societal inequality, making it clear that it is difficult to progress within its social structures and health provision. The Vice video highlights the same issue: public places are the main focus of surveillance, but cameras and reporting systems are increasingly being deployed in government housing complexes, and it is unclear whether private landlords are using technology linked to the state’s social credit system.

When we think of surveillance and ranking, the technology matters, but the decision being made or signed off is often of the most significant ethical concern. If decisions to surveil people and encroach on their freedoms are already being made without technology, a wider technological tool would only exacerbate those issues. Often it is not the technology per se that causes problems; it is the human decision behind it. For example, St George’s Medical School was found to have used an algorithm that was biased by race and gender to select candidates for interview. More recently, we have seen examples of racial bias in medical treatment triage in the US. Human decision makers need to be cognizant of these biases, consider how they play out in production, and stress test systems appropriately, particularly over time where techniques such as reinforcement learning are applied and a system’s behaviour keeps changing. The medical and scientific community is working hard to reduce potential harm from AI systems, and a set of guidelines was recently published for the use of AI systems in clinical trials.
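To make “stress testing” a little more concrete, here is a minimal sketch of one common check: comparing how often different groups are selected by an automated decision. It is only an illustration under stated assumptions, not how any of the systems mentioned above were actually audited. The groups, the decisions and the function names are hypothetical, and the 0.8 threshold is the “four-fifths” heuristic from US employment guidance, used here as an assumed cut-off.

```python
from collections import Counter

def selection_rates(decisions):
    """Return the share of candidates selected in each group.

    `decisions` is a list of (group, was_selected) pairs (hypothetical format).
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest

# Made-up decisions for two hypothetical groups, A and B.
decisions = ([("A", True)] * 60 + [("A", False)] * 40
             + [("B", True)] * 30 + [("B", False)] * 70)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)                   # {'A': 0.6, 'B': 0.3}
print(f"ratio = {ratio:.2f}")  # ratio = 0.50

# Assumed threshold: the "four-fifths" heuristic, not a legal or clinical standard here.
if ratio < 0.8:
    print("Selection rates differ substantially: flag for human review")
```

Passing a check like this does not show a system is fair; it only flags one kind of disparity for a human to investigate. Re-running it on each new batch of decisions, say monthly, is one simple way to notice when a system that keeps learning starts to drift.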

A recently reported plan to track alcohol consumption during pregnancy has been met with concern over personal freedom and medical confidentiality. That approach is paper-based and self-reported; however, with wearable monitors such as the Apple Watch or Fitbit, detecting behaviour is becoming easier as more data is collected and modelled for real-world scenarios.

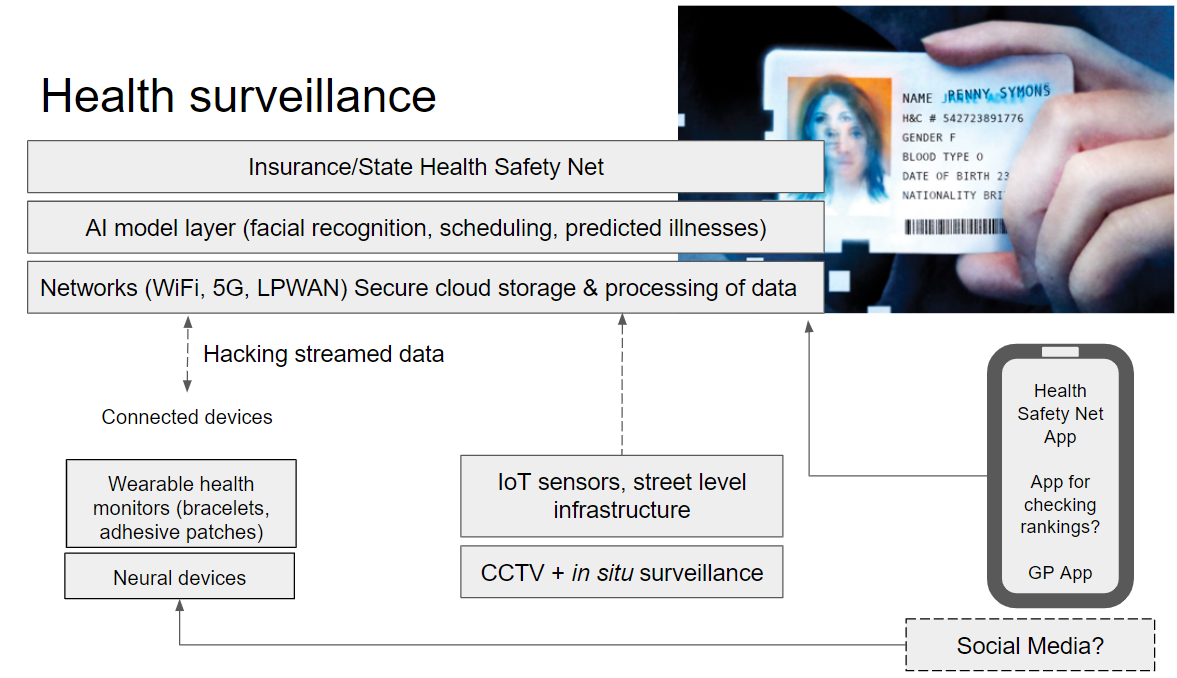

The diagram above sets out some of the technology which would enable the system in the story. This technology relies on Internet of Things (IoT) sensors and wearables, a huge network and storage infrastructure, and a range of algorithms and AI models to make use of the data. We often think of computer vision as the main tool of surveillance technology, but individuals can be identified from a combination of many other forms of data, whether location and social media, or clinical test results. I have included newer forms of device, such as Elon Musk’s neural interface (Neuralink), which would likely add to Janie’s discomfort and potential paranoia as monitoring moves from measuring behaviour towards detecting thoughts.

In many surveillance scenarios, camera tracking and facial recognition have prompted a trend of physically “hacking” your appearance to fool a facial recognition system. In the story, Janie and her friends fool the AI model by modifying their data streams, but the principle is largely the same. As more data is fed into the system, however, fooling it becomes more difficult, and these methods would lose their effectiveness over time.

The story’s system also features ranking and competition: huge amounts of multi-modal data are used to build a societal model for healthcare, which Janie sees on her “Trace bracelet”. We use social media every day, for work and pleasure, and many of these platforms have been scrutinized for their effect on mental health. A 2017 report from the Royal Society for Public Health explored the impact of different social media platforms on mental health, and it is useful to read alongside Janie’s experience of a constant feed of information about her health, considering how such a feed might affect her mental well-being and perception of self.

Through COVID19, we are seeing vast changes to the ways we work and how we access healthcare services. There has been a sharp increase in the adoption of health service technology, with 99% of GP visits being conducted through telemedicine systems and a 97% increase in the number of prescriptions ordered through the NHS App. The emphasis in the early phases of the pandemic was on protecting the NHS from being overwhelmed; however, there are growing concerns about treatment backlogs and cases which have not yet been diagnosed, and combined with a winter COVID19 peak these may have dramatic consequences for the UK’s population. A concerted effort is therefore needed in triaging and scheduling appointments, treatment and testing, for both COVID19 and non-COVID19 illnesses. When rationing and scheduling these different forms of treatment, where the line is drawn between a human decision and an algorithmic decision will be very important. Less well-off neighborhoods and BAME communities are often disproportionately affected by COVID19 and, as we have already seen, are also often the victims of inadequate algorithmic systems.

Personalized medicine is a field which has developed rapidly since the advent of genome sequencing technology and many different biomarker tests. However, it is difficult to create an environment where so much is known about the individual without compromising privacy and confidentiality. Data from the DNA service 23andMe are shared in an R&D partnership with GSK, with the aim of curing diseases and discovering new treatments, but customers choose whether to take part in research. Under a state-wide surveillance system, by contrast, it could be mandatory for any data about an individual to be made available for the model’s rankings.

It is hard to weigh the potential benefits of a state-wide surveillance system, as there are so many major concerns. The political systems which typically promote such approaches are either the fictional ones described by Orwell, Huxley and Atwood, or real totalitarian regimes. Neither should be used as inspiration or replicated as part of a plan for the future of healthcare. There are, however, areas of clinical and social systems which could benefit from an overarching technical infrastructure for monitoring: functions where humans are not the subject of surveillance. Through COVID19, we have seen how vulnerable certain supply chains are, and stock monitoring, procedure scheduling and clinical logistics would all benefit from a technical upgrade. Managing these systems better with technology could optimize budgets and provide a better health and care experience.

We have seen how surveillance, behaviour tracking and personal biometrics are all being met with public concern in a range of different contexts. The government has big decisions to make in the coming months about systems being developed for COVID19, triaging the backlog of procedures, and rolling out vaccines. Hastily implemented algorithms, such as the one Ofqual used to award exam grades, have already had a significant impact during this period. We must carefully consider what should remain after COVID19 and what is only acceptable in a state of emergency.

"Algorithms have gradually replaced human decision making for a while now, in various areas of life, but we didn't know until recently the harms and damage they can cause. And as this process will continue, with automated decision making will make most of the decisions for us, in critical life situations like healthcare or education, how will we make sure they remain equitable, fair, transparent, and challenged when needed. Can we do it this or all is lost? And aren't we sleep walking into a world run by algorithms where survival means playing the system? Jury is still out.." Maria Axente, Responsible AI and AI for Good Lead, PwC UK